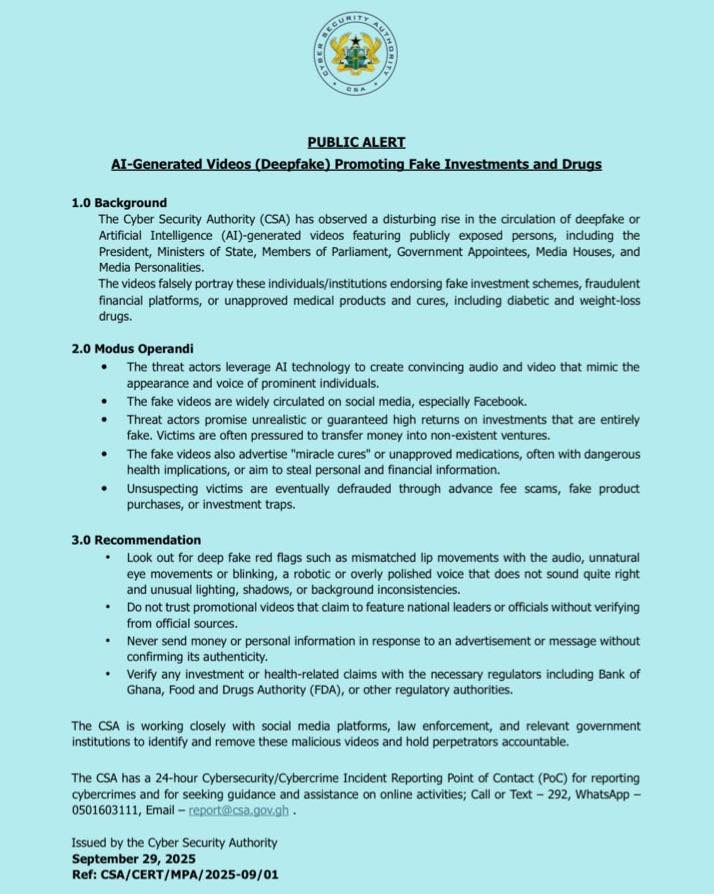

The Cyber Security Authority (CSA) has cautioned the public about a disturbing surge in AI-generated videos, commonly known as deepfakes, that are being used to promote fake investment schemes and unapproved drugs.

According to the Authority, the videos feature manipulated images and voices of high-profile personalities, including the President, Ministers of State, Members of Parliament, and media figures, and are being widely shared on social media platforms such as Facebook to deceive unsuspecting victims.

“These deepfake videos are designed to look very real, and that is what makes them so dangerous,” a CSA spokesperson said. “They falsely portray respected individuals endorsing fake financial platforms or miracle drugs, but in reality, they are scams targeted at defrauding the public.”

The Authority noted that victims are often lured into transferring money into non-existent accounts with the promise of high returns, while others are deceived into buying fake medical products.

The CSA has therefore advised the public to stay vigilant and watch for red flags in promotional videos, such as mismatched lip movements, unnatural eye blinking, or unusual lighting. “We strongly urge citizens not to send money or personal information in response to any advertisement without first confirming its authenticity,” the spokesperson added.

The Authority further recommended that individuals verify any investment or health-related claims with regulatory bodies such as the Bank of Ghana and the Food and Drugs Authority before taking action.

“The public must remember that if an offer sounds too good to be true, it usually is. We encourage everyone to report suspicious content immediately so perpetrators can be tracked and held accountable,” the CSA emphasized.

The Authority has set up a 24-hour Cybersecurity/Cybercrime Incident Reporting Point of Contact for individuals seeking assistance with suspected scams online.

See the full statement below.